Federated Learning under Presence of Label Noise

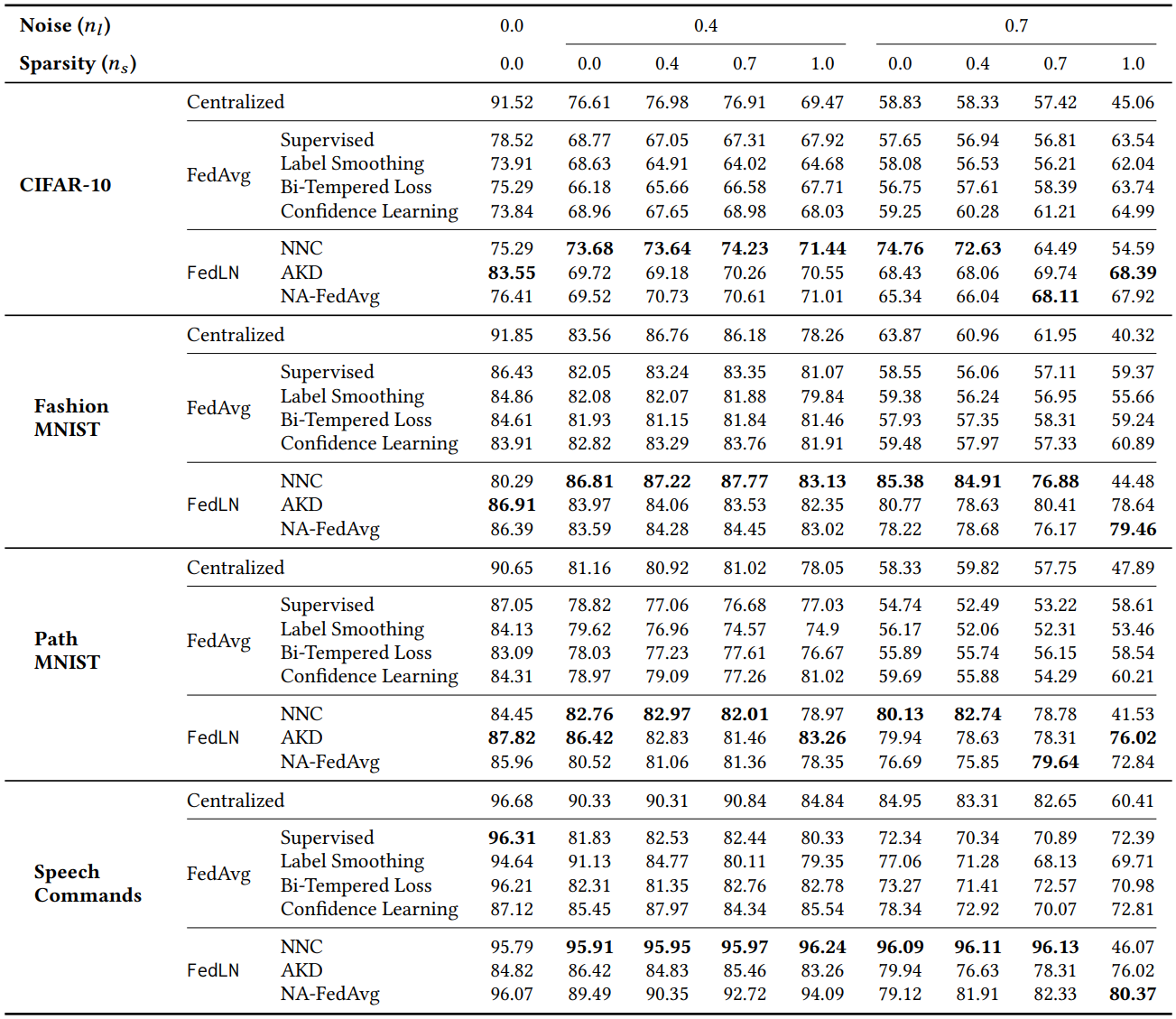

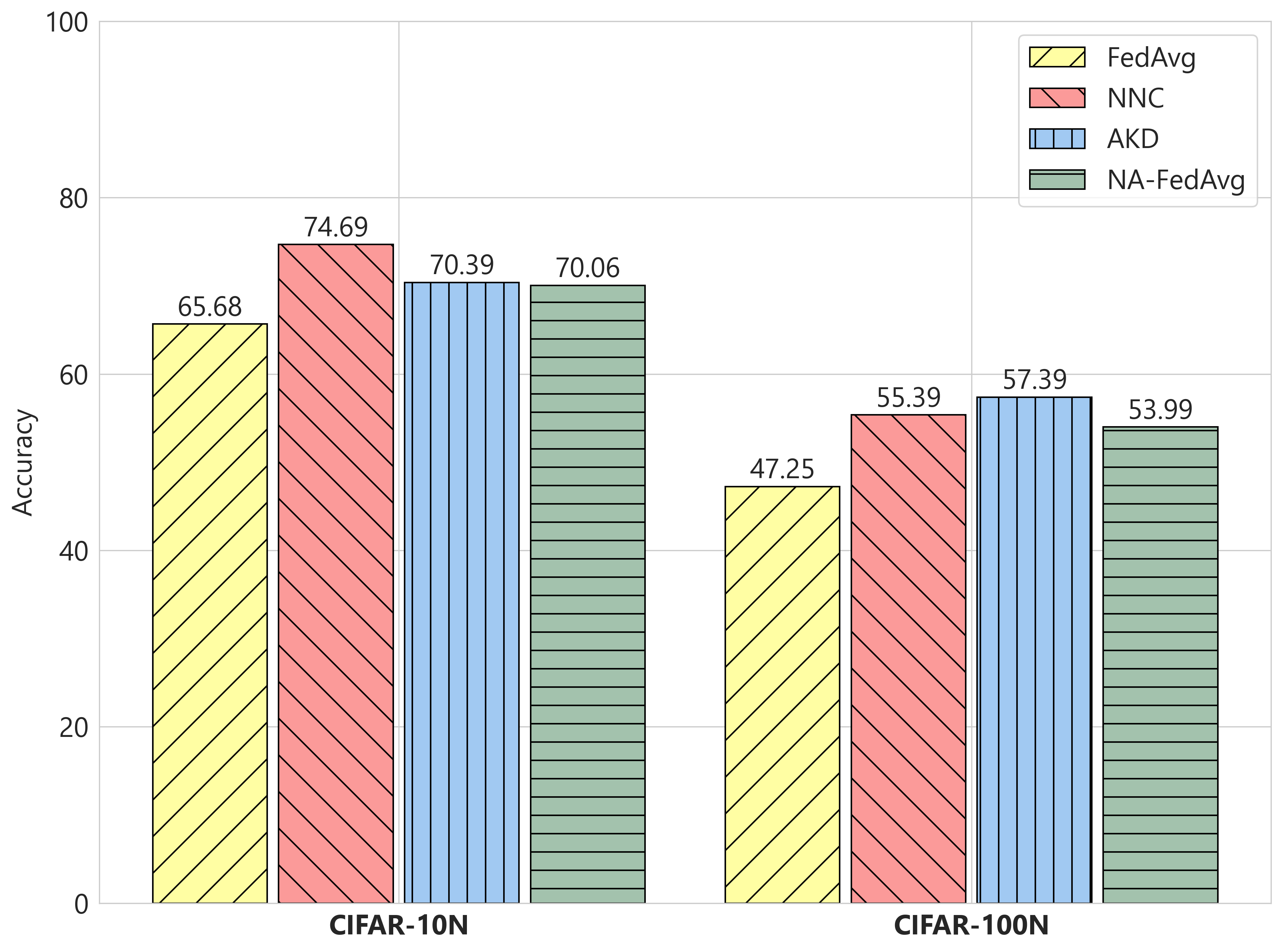

In federated learning (FL), clients' local data are non-i.i.d. in both their data samples and their label-noise profiles, and no global entity can directly access the complete pool of data. This renders data-centric and model decision-based centralized approaches infeasible or ineffective. In FedLN, we exploit per-client noise level estimates to mitigate the effect of noisy labels on the federated model's performance.
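To make the idea of using per-client noise estimates concrete, here is a minimal sketch of one way such estimates could enter server-side aggregation. It is an illustration under stated assumptions, not necessarily the exact rule used in FedLN: the function name `noise_aware_average` and the weighting `num_samples * (1 - noise_level)` are hypothetical choices made for this example.

```python
from typing import List, Sequence


def noise_aware_average(
    client_params: Sequence[Sequence[float]],
    num_samples: Sequence[int],
    noise_levels: Sequence[float],
) -> List[float]:
    """Average flat client parameter vectors, down-weighting noisier clients.

    Assumption (illustrative only): a client's aggregation weight is
    proportional to num_samples * (1 - estimated_noise_level).
    """
    if not (len(client_params) == len(num_samples) == len(noise_levels)):
        raise ValueError("all inputs must have one entry per client")
    if any(e < 0.0 or e > 1.0 for e in noise_levels):
        raise ValueError("noise levels must lie in [0, 1]")

    # Unnormalized weight: effective number of clean samples per client.
    raw = [n * (1.0 - e) for n, e in zip(num_samples, noise_levels)]
    total = sum(raw)
    if total == 0:
        raise ValueError("no client has a non-zero weight")
    coeffs = [r / total for r in raw]

    # Weighted sum of the parameter vectors, coordinate by coordinate.
    dim = len(client_params[0])
    return [
        sum(c * p[i] for c, p in zip(coeffs, client_params))
        for i in range(dim)
    ]
```

When all clients report the same noise level, the weights reduce to the sample-count weighting of standard federated averaging; a client with a higher estimated noise level contributes proportionally less to the global model.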

- Adaptive Knowledge Distillation (AKD)
- Noise-aware Federated Averaging (NA-FedAvg)
- Deep Nearest Neighbor-based Correction (NNC)